The biggest expansion of models to date, new inference optimization tools, and additional data capabilities give customers even greater flexibility and control to build and deploy production-ready generative AI faster

At AWS re:Invent, Amazon Web Services, Inc. (AWS), an

Amazon.com, Inc. company (NASDAQ: AMZN), today announced new

innovations for Amazon Bedrock, a fully managed service for

building and scaling generative artificial intelligence (AI)

applications with high-performing foundation models. Today’s

announcements reinforce AWS’s commitment to model choice, optimize

how inference is done at scale, and help customers get more from

their data.

This press release features multimedia. View

the full release here:

https://www.businesswire.com/news/home/20241204780931/en/

Discover Amazon Bedrock Marketplace models and Amazon Bedrock fully managed models in the new model catalog (Graphic: Business Wire)

- AWS will soon be the first cloud provider to offer models from

Luma AI and poolside. AWS will also add the latest Stability AI

model in Amazon Bedrock and, through the new Amazon Bedrock

Marketplace capability, is giving access to more than 100 popular,

emerging, and specialized models, so customers can find the right

set of models for their use case.

- New prompt caching and Amazon Bedrock Intelligent Prompt

Routing help customers more easily and cost-effectively scale

inference.

- Support for structured data and GraphRAG in Amazon Bedrock

Knowledge Bases further expands how customers can leverage their

data to deliver customized generative AI experiences.

- Amazon Bedrock Data Automation automatically transforms

unstructured, multimodal data into structured data with no coding

required—helping customers use more of their data for generative AI

and analytics.

- Tens of thousands of customers trust Amazon Bedrock to run

their generative AI applications as the service has grown its

customer base by 4.7x in the last year. Adobe, Argo Labs, BMW

Group, Octus, Symbeo, Tenovos, and Zendesk are already adopting the

latest Amazon Bedrock advancements.

“Amazon Bedrock continues to see rapid growth as customers flock

to the service for its broad selection of leading models, tools to

easily customize with their data, built-in responsible AI features,

and capabilities for developing sophisticated agents,” said Dr.

Swami Sivasubramanian, vice president of AI and Data at AWS.

“Amazon Bedrock is helping to tackle the biggest roadblocks

developers face today, so customers can realize the full potential

of generative AI. With this new set of capabilities, we are

empowering customers to develop more intelligent AI applications

that will deliver greater value to their end users.”

The broadest selection of models from leading AI companies

Amazon Bedrock provides customers with the broadest selection of

fully managed models from leading AI companies, including AI21

Labs, Anthropic, Cohere, Meta, Mistral AI, and Stability AI.

Additionally, Amazon Bedrock is the only place customers can access

the newly announced Amazon Nova models, a new generation of

foundation models that deliver state-of-the-art intelligence across

a wide range of tasks and industry-leading price performance. With

today’s announcements, AWS is further expanding model choice in

Amazon Bedrock with the addition of more industry-leading

models.

- Luma AI’s Ray 2: Luma AI’s multimodal models and

software products advance video content creation with generative

AI. AWS will be the first cloud provider to make Luma AI’s

state-of-the-art Luma Ray 2 model, the second generation of its

renowned video model, available to customers. Ray 2 marks a

significant advancement in generative AI-assisted video creation,

generating high-quality, realistic videos from text and images with

efficiency and cinematographic quality. Customers can rapidly

experiment with different camera angles and styles, create videos

with consistent characters and accurate physics, and deliver

creative outputs for architecture, fashion, film, graphic design,

and music.

- poolside’s malibu and point: poolside addresses the

challenges of modern software engineering for large enterprises.

AWS will be the first cloud provider to offer access to poolside’s

malibu and point models, which excel at code generation, testing,

documentation, and real-time code completion. This empowers

engineering teams to improve productivity, write better code

faster, and accelerate product development cycles. Both models can

also be fine-tuned securely and privately on customers’ codebases,

practices, and documentation, enabling them to adapt to specific

projects and helping customers tackle daily software engineering

tasks with increased accuracy and efficiency. Additionally, AWS

will be the first cloud provider to offer access to poolside’s

Assistant, which puts the power of poolside’s malibu and point

models inside developers’ preferred integrated development

environments (IDEs).

- Stability AI’s Stable Diffusion 3.5 Large: Stability AI

is a leading generative AI model developer in visual media, with

cutting-edge models in image, video, 3D, and audio. Amazon Bedrock

will soon add Stable Diffusion 3.5 Large, Stability AI’s most

advanced text-to-image model. This new model generates high-quality

images from text descriptions in a wide range of styles to

accelerate the creation of concept art, visual effects, and

detailed product imagery for customers in media, gaming,

advertising, and retail.

Access more than 100 popular, emerging, and specialized models with Amazon Bedrock Marketplace

While the models in Amazon Bedrock can support a wide range of

tasks, many customers want to incorporate emerging and specialized

models into their applications to power unique use cases, like

analyzing a financial document or generating novel proteins. With

Amazon Bedrock Marketplace, customers can now easily find and

choose from more than 100 models that can be deployed on AWS and

accessed through a unified experience in Amazon Bedrock. This

includes popular models such as Mistral AI’s Mistral NeMo Instruct

2407, Technology Innovation Institute’s Falcon RW 1B, and NVIDIA

NIM microservices, along with a wide array of specialized models,

including Writer’s Palmyra-Fin for the financial industry,

Upstage’s Solar Pro for translation, Camb.ai’s text-to-audio MARS6,

and EvolutionaryScale’s ESM3 generative model for biology.

Once a customer finds a model they want, they select the

appropriate infrastructure for their scaling needs and easily

deploy on AWS through fully managed endpoints. Customers can then

securely integrate the model with Amazon Bedrock’s unified

application programming interfaces (APIs), leverage tools like

Guardrails and Agents, and benefit from built-in security and

privacy features.

Zendesk is a global service software company with a diverse and

multicultural customer base of 100,000 brands around the world. The

company can use specialized models, like Widn.AI for translation,

in Amazon Bedrock to personalize and localize customer service

requests across email, chat, phone, and social media. This will

provide agents with the data they need, such as sentiment or intent

in the customer’s native language, to ultimately enhance the

customer service experience.

Prompt caching and Intelligent Prompt Routing help customers tackle inference at scale

When selecting a model, developers need to balance multiple

considerations, like accuracy, cost, and latency. Optimizing for

any one of these factors can mean compromising on the others. To

balance these considerations when deploying an application into

production, customers employ a variety of techniques, like caching

frequently used prompts or routing simple questions to smaller

models. However, using these techniques is complex and

time-consuming, requiring specialized expertise to iteratively test

different approaches to ensure a good experience for end users.

That is why AWS is adding two new capabilities to help customers

more effectively manage prompts at scale.

- Lower response latency and costs by caching prompts:

Amazon Bedrock can now securely cache prompts to reduce repeated

processing, without compromising on accuracy. This can reduce costs

by up to 90% and latency by up to 85% for supported models. For

example, a law firm could create a generative AI chat application

that can answer lawyers’ questions about documents. When multiple

lawyers ask questions about the same part of a document in their

prompts, Amazon Bedrock could cache that section, so the section

only gets processed once and can be reused each time someone wants

to ask a question about it. This reduces the cost by shrinking the

amount of information the model needs to process each time. Adobe’s

Acrobat AI Assistant enhances user productivity by enabling quick

document summarization and question answering. With prompt caching

on Amazon Bedrock, Adobe observed a 72% reduction in response time,

based on preliminary testing.

- Intelligent Prompt Routing helps optimize response quality

and cost: With Intelligent Prompt Routing, customers can

configure Amazon Bedrock to automatically route prompts to

different foundation models within a model family, helping them

optimize for response quality and cost. Using advanced prompt

matching and model understanding techniques, Intelligent Prompt

Routing predicts the performance of each model for each request and

dynamically routes requests to the model most likely to give the

desired response at the lowest cost. Intelligent Prompt Routing can

reduce costs by up to 30% without compromising on accuracy. Argo

Labs, which delivers innovative voice agent solutions for

restaurants, handles diverse customer inquiries and reservations

with Intelligent Prompt Routing. As customers submit questions,

place orders, and book tables, Argo Labs’ voice chatbot dynamically

routes the query to the most suitable model, optimizing for both

cost and quality of responses. For example, a simple yes-no

question, like “Do you have an open table at this restaurant

tonight?” could be handled by a smaller model, while a larger model

could answer more complex questions such as, “What kind of vegan

options does this restaurant provide?” With Intelligent Prompt

Routing, Argo Labs can use their voice agents to seamlessly handle

customer interactions, while achieving the right balance of

accuracy and cost.
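
The two ideas above can be illustrated with a minimal, self-contained sketch. It is not the Amazon Bedrock API: `ToyPromptCache`, `route`, the model labels, and the yes/no heuristic are all hypothetical stand-ins, assuming only what the release describes (a shared prompt section is processed once and reused, and simple questions go to a smaller model).

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ToyPromptCache:
    """Hypothetical cache: stores the 'processed' form of a shared prompt prefix."""
    store: dict = field(default_factory=dict)
    hits: int = 0
    misses: int = 0

    def process_prefix(self, prefix: str) -> str:
        # Key the cache on a hash of the shared section (e.g. a document excerpt).
        key = hashlib.sha256(prefix.encode("utf-8")).hexdigest()
        if key in self.store:
            self.hits += 1
        else:
            self.misses += 1
            # Stand-in for the expensive work a model would do on the prefix.
            self.store[key] = f"processed:{len(prefix)} chars"
        return self.store[key]


def route(question: str, word_limit: int = 12) -> str:
    """Hypothetical heuristic: short yes/no questions go to the smaller model.

    The release says routing is based on predicted model performance per
    request; this keyword check is only a simplified placeholder for that.
    """
    words = question.strip().split()
    yes_no_starters = {"do", "does", "is", "are", "can", "will"}
    is_yes_no = bool(words) and words[0].lower() in yes_no_starters
    if is_yes_no and len(words) <= word_limit:
        return "small-model"
    return "large-model"


if __name__ == "__main__":
    cache = ToyPromptCache()
    section = "Clause 7: termination terms for the agreement"
    cache.process_prefix(section)  # first lawyer: section is processed
    cache.process_prefix(section)  # second lawyer: section is reused
    print(cache.misses, cache.hits)
    print(route("Do you have an open table at this restaurant tonight?"))
    print(route("What kind of vegan options does this restaurant provide?"))
```

In this sketch the second request for the same section is served from the cache, and the two restaurant questions quoted above land on different model tiers. The actual savings depend on the supported models and the workload.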

Two new capabilities for Amazon Bedrock Knowledge Bases help customers maximize the value of their data

Customers want to leverage their data, no matter where or in

what format it resides, to build unique generative AI-powered

experiences for end users. Knowledge Bases is a fully managed

capability that makes it easy for customers to customize foundation

model responses with contextual and relevant data using retrieval

augmented generation (RAG). While Knowledge Bases already makes it

easy to connect to data sources like Amazon OpenSearch Serverless

and Amazon Aurora, many customers have other data sources and data

types they would like to incorporate into their generative AI

applications. That is why AWS is adding two new capabilities to

Knowledge Bases.

- Support for structured data retrieval accelerates generative

AI app development: Knowledge Bases provides one of the first

managed, out-of-the-box RAG solutions that enables customers to

natively query their structured data where it resides for their

generative AI applications. This capability helps break data silos

across data sources, accelerating generative AI development from

over a month to just days. Customers can build applications that

use natural language queries to explore structured data stored in

sources like Amazon SageMaker Lakehouse, Amazon S3 data lakes, and

Amazon Redshift. With this new capability, prompts are translated

into SQL queries to retrieve data results. Knowledge Bases

automatically adjusts to a customer’s schema and data, learns from

query patterns, and provides a range of customization options to

further enhance accuracy for their chosen use case. Octus, a

credit intelligence company, will use the new structured data

retrieval capability in Knowledge Bases to allow end users to query

structured data using natural language. By connecting Knowledge

Bases to Octus’ existing master data management system, end-user

prompts can be translated into SQL queries that Amazon Bedrock uses

to retrieve the relevant information and return it to the user as

part of the application’s response. This will help Octus’ chatbots

deliver precise, data-driven insights to its users and enhance the

users’ interactions with the company’s array of data products.

- Support for GraphRAG generates more relevant responses:

Knowledge graphs allow customers to model and store relationships

between data by mapping different pieces of relevant information

like a web. These knowledge graphs can be particularly useful when

incorporated into RAG, allowing a system to easily traverse and

retrieve relevant parts of information by following the graph. Now,

with support for GraphRAG, Knowledge Bases can enable customers to

automatically generate graphs using Amazon Neptune, AWS’s managed

graph database, and link relationships between entities across

data, without requiring any graph expertise. Knowledge Bases makes

it easier to generate more accurate and relevant responses,

identify related connections using the knowledge graph, and view

the source information to understand how a model arrived at a

specific response. BMW Group will implement GraphRAG for My AI

Assistant (MAIA), an AI-powered virtual assistant that helps users

find, understand, and integrate the company’s internal data assets

hosted on AWS. With GraphRAG’s automated graph modeling powered by

Amazon Neptune, BMW will be able to continuously update the

knowledge graph powering MAIA based on data usage to provide more

relevant and comprehensive insights from its data assets to

continue creating premium experiences for millions of drivers.
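
As a rough illustration of the graph-traversal idea behind GraphRAG, the sketch below walks a tiny in-memory graph and collects the relationships within a few hops of a starting entity. It does not use Amazon Neptune or Knowledge Bases. The graph contents, the entity names, and the `related_facts` helper are hypothetical and assumed for illustration only.

```python
from collections import deque

# Hypothetical toy knowledge graph: entity -> list of (relation, entity).
GRAPH = {
    "dataset_a": [("owned_by", "team_x"), ("feeds", "dashboard_1")],
    "team_x": [("maintains", "dataset_b")],
    "dashboard_1": [],
    "dataset_b": [],
}


def related_facts(graph, start, max_hops=2):
    """Breadth-first traversal that collects (source, relation, target)
    facts reachable within max_hops of the starting entity."""
    facts = []
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for relation, neighbor in graph.get(node, []):
            facts.append((node, relation, neighbor))
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, depth + 1))
    return facts


if __name__ == "__main__":
    # Facts like these could be added to a prompt as retrieved context.
    for fact in related_facts(GRAPH, "dataset_a"):
        print(fact)
```

A retrieval step built this way can surface connected information (here, that `team_x` also maintains `dataset_b`) that a lookup on the starting entity alone would miss.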

Amazon Bedrock Data Automation transforms unstructured multimodal data into structured data for generative AI and analytics

Today, most enterprise data is unstructured and is contained in

content like documents, videos, images, and audio files. Many

customers want to take advantage of this data to discover insights

or build new experiences for customers, but it is often a

difficult, manual process to convert it into a format that can be

easily used for analytics or RAG. For example, a bank may take in

multiple PDF documents for loan processing and need to extract

details from each one, normalize features like name or date of

birth for consistency, and transform the results into a text-based

format before entering them into a data warehouse to perform any

analyses. With Amazon Bedrock Data Automation, customers can

automatically extract, transform, and generate data from

unstructured content at scale using a single API.
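
To make the bank example concrete, the sketch below shows the kind of hand-written normalization step that such a pipeline typically involves. It is not the Amazon Bedrock Data Automation API. The `Applicant` schema, the helper names, and the list of accepted date formats are assumptions chosen for illustration, and a slash-separated date is assumed to be month/day/year.

```python
from dataclasses import dataclass
from datetime import datetime

# Assumed input formats; real documents may use others.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %B %Y")


@dataclass
class Applicant:
    """Hypothetical target schema for one extracted loan applicant."""
    name: str
    date_of_birth: str  # ISO 8601 (YYYY-MM-DD)


def normalize_name(raw: str) -> str:
    # Collapse extra whitespace and capitalize each part of the name.
    return " ".join(part.capitalize() for part in raw.split())


def normalize_date(raw: str) -> str:
    # Try each assumed format in turn and return an ISO 8601 date string.
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")


def to_record(raw_name: str, raw_dob: str) -> Applicant:
    return Applicant(normalize_name(raw_name), normalize_date(raw_dob))


if __name__ == "__main__":
    print(to_record("  jANE   doe ", "03/15/1985"))
    print(to_record("John Smith", "15 March 1985"))
```

Each new document layout or date convention means another rule to write and maintain by hand, which is the manual effort the paragraph above describes.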

Amazon Bedrock Data Automation can quickly and cost-effectively

extract information from documents, images, audio, and videos and

transform it into structured formats for use cases like intelligent

document processing, video analysis, and RAG. Amazon Bedrock Data

Automation can generate content using predefined defaults, like

scene-by-scene descriptions of video stills or audio transcripts,

or customers can create an output based on their own data schema

that they can then easily load into an existing database or data

warehouse. Through an integration with Knowledge Bases, Amazon

Bedrock Data Automation can also be used to parse content for RAG

applications, improving the accuracy and relevancy of results by

including information embedded in both images and text. Amazon

Bedrock Data Automation provides customers with a confidence score

and grounds its responses in the original content, helping to

mitigate the risk of hallucinations and increasing

transparency.

Symbeo is a CorVel company that offers automated accounts

payable solutions. The company will use Amazon Bedrock Data

Automation to automate the extraction of data from complex

documents, such as insurance claims, medical bills, and more. This

will help Symbeo’s teams process claims faster and accelerate the

turnaround time to get back to their customers. Tenovos, a digital

asset management platform, is using Amazon Bedrock Data Automation

to enable semantic search at scale to increase content reuse by 50%

or more, saving millions in marketing expenses.

Amazon Bedrock Marketplace is available today. Inference

management capabilities, structured data retrieval and GraphRAG in

Amazon Bedrock Knowledge Bases, and Amazon Bedrock Data Automation

are all available in preview. Models from Luma AI, poolside, and

Stability AI are coming soon.

To learn more, visit:

- The AWS News Blog for details on today’s announcements: Amazon

Bedrock Marketplace, prompt caching and Intelligent Prompt Routing,

and data processing and retrieval capabilities.

- The Amazon Bedrock page to learn more about the

capabilities.

- The Amazon Bedrock customer page to learn how companies are

using Amazon Bedrock.

- The AWS re:Invent page for more details on everything happening

at AWS re:Invent.

About Amazon Web Services

Since 2006, Amazon Web Services has been the world’s most

comprehensive and broadly adopted cloud. AWS has been continually

expanding its services to support virtually any workload, and it

now has more than 240 fully featured services for compute, storage,

databases, networking, analytics, machine learning and artificial

intelligence (AI), Internet of Things (IoT), mobile, security,

hybrid, media, and application development, deployment, and

management from 108 Availability Zones within 34 geographic

regions, with announced plans for 18 more Availability Zones and

six more AWS Regions in Mexico, New Zealand, the Kingdom of Saudi

Arabia, Taiwan, Thailand, and the AWS European Sovereign Cloud.

Millions of customers—including the fastest-growing startups,

largest enterprises, and leading government agencies—trust AWS to

power their infrastructure, become more agile, and lower costs. To

learn more about AWS, visit aws.amazon.com.

About Amazon

Amazon is guided by four principles: customer obsession rather

than competitor focus, passion for invention, commitment to

operational excellence, and long-term thinking. Amazon strives to

be Earth’s Most Customer-Centric Company, Earth’s Best Employer,

and Earth’s Safest Place to Work. Customer reviews, 1-Click

shopping, personalized recommendations, Prime, Fulfillment by

Amazon, AWS, Kindle Direct Publishing, Kindle, Career Choice, Fire

tablets, Fire TV, Amazon Echo, Alexa, Just Walk Out technology,

Amazon Studios, and The Climate Pledge are some of the things

pioneered by Amazon. For more information, visit amazon.com/about

and follow @AmazonNews.


Amazon.com, Inc. Media Hotline Amazon-pr@amazon.com

www.amazon.com/pr

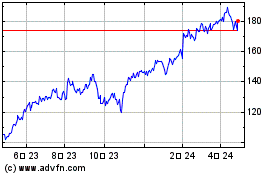

Amazon.com (NASDAQ:AMZN)

過去 株価チャート

から 11 2024 まで 12 2024

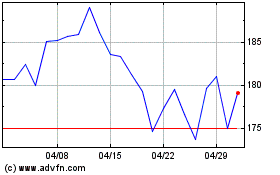

Amazon.com (NASDAQ:AMZN)

過去 株価チャート

から 12 2023 まで 12 2024